Many a times data—or how you interpret it—is misleading?

And as a data scientist, your job is to extract meaningful insights from data.

But, biases can silently creep into the models, leading to inaccurate predictions and poor decision-making.

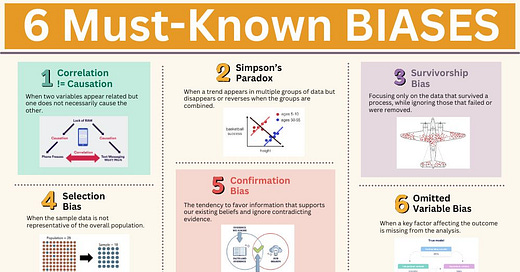

So, let’s explore 6 most critical biases you must watch out for and how to handle them.

⏸️ Quick Pause: If you’re new here, I’d highly appreciate if you subscribe to recieve bi-weekly data tips and insights — directly into your inbox. 👇🏻

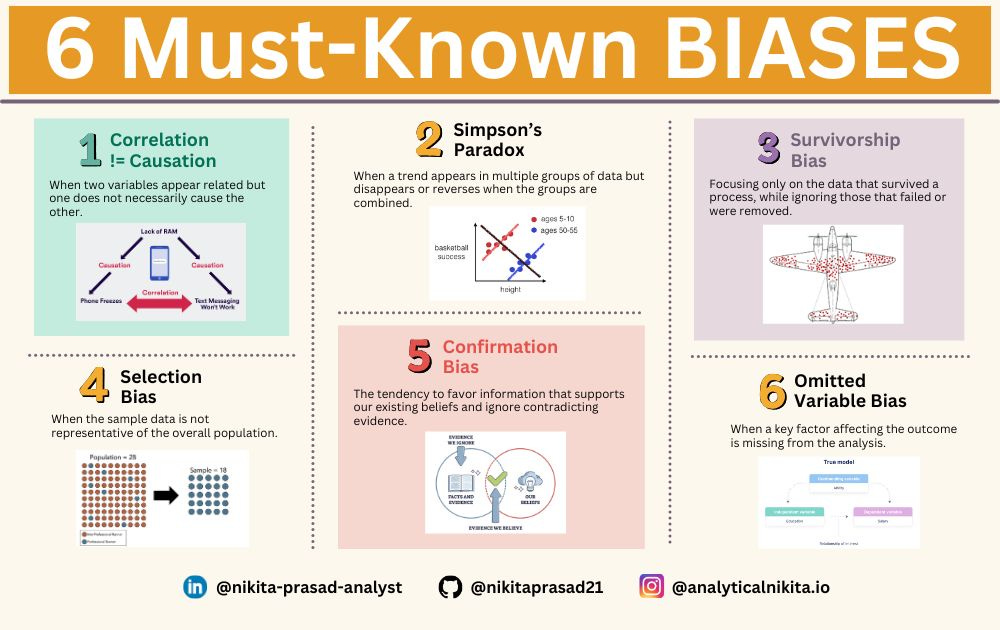

1. Correlation vs. Causation Fallacy – Not Everything That Moves Together Is Connected

A company notices that employees who wear headphones are more productive.

But does wearing headphones cause productivity, or are highly focused employees more likely to wear headphones?

This is the classic scenario of confusing correlation (two things happening together) with causation (one thing directly causing the other).

How to avoid it?

Always try to answer, Could there be third factor influencing both variables?

Use controlled experiments (e.g., A/B testing) to establish causation.

BONUS READ: 👇🏻

2. Simpson’s Paradox – When Trends Trick You

Imagine you’re analyzing hospital performance and find that Hospital A has a higher survival rate than Hospital B.

Seems simple and clear, right?

But when you break it down by patient severity, Hospital B actually has better survival rates for both mild and critical cases.

What happened?

This is called Simpson’s Paradox.

When data is grouped incorrectly, your trend might be positive at the global level, but when you break it down, the trends may reverse.

The problem arises because different sub-groups may behave differently.

How to avoid it?

Always segment your data to check if trends hold across different groups.

Look at confounding variables—hidden factors that could explain the results.

3. Survivorship Bias – The Data You Don’t See Matters

During World War II, engineers studied bullet holes on returning planes to decide where to add armor.

They initially thought to reinforce the areas with the most bullet holes.

But they were missing a key insight—planes hit in other areas never returned.

This is survivorship bias—focusing only on successful cases while ignoring failures.

How to avoid it?

Consider thinking out of the box like what’s missing from the dataset, before drawing conclusions.

Look at failure cases as well, not just success stories.

4. Selection Bias – The Wrong Sample, the Wrong Conclusions

Imagine you analyze customer feedback and find that 95% of users love your app.

You conclude that your product is performing exceptionally well.

However, your data only includes responses from active users—those who already enjoy the app.

The problem?

You're missing feedback from users who churned or never engaged in the first place.

If you only analyze feedback from happy users, you might overestimate customer satisfaction and miss critical pain points that drive users away.

This is selection bias—when your sample doesn’t represent the entire population.

How to avoid it?

Use random sampling to ensure diverse representation.

Be mindful of who is excluded from the dataset.

5. Confirmation Bias – Seeing What You Want to See

People who believe in a conspiracy theory tend to mostly read sources that support it.

That’s confirmation bias—favoring information that confirms your existing beliefs while ignoring contradictory evidence.

In data science, this happens when you test models but only report metrics that support your hypothesis.

How to avoid it?

Challenge your assumptions—ask, What would prove me wrong?

Perform A/B testing and validate results using multiple methods.

6. Omitted Variable Bias – The Missing Piece of the Puzzle

A study finds that students who drink coffee score higher on exams.

But it ignores the fact that students who drink coffee might also study more.

Omitted variable bias happens when you ignore an important factor that affects both the cause and effect.

How to avoid it?

Identify all possible influences before drawing conclusions.

Use domain expertise and statistical tests to check for missing variables.

I hope this guide helped you gain a deeper understanding of biases and their impact on building robust models also produce accurate, fair insights.

REMEMBER:

to always question your data,

validate your assumptions,

look for bigger picture rather than what’s easy to measure, and

be wary of drawing conclusions from observational data alone.

Comment Down 👇: Have you ever made a decision based on data that later turned out to be misleading?

Until next time, happy learning!