How to Prevent a Gradient Boosting Model from Overfitting?

...The Most Important Interview Question!

A good model should learn — not memorize.

That’s how you build models that are smart, simple, and ready for real-world problems.

Let’s find out how you can stop a Gradient Boosting Model from overfitting.


What is the Gradient Boosting Algorithm?

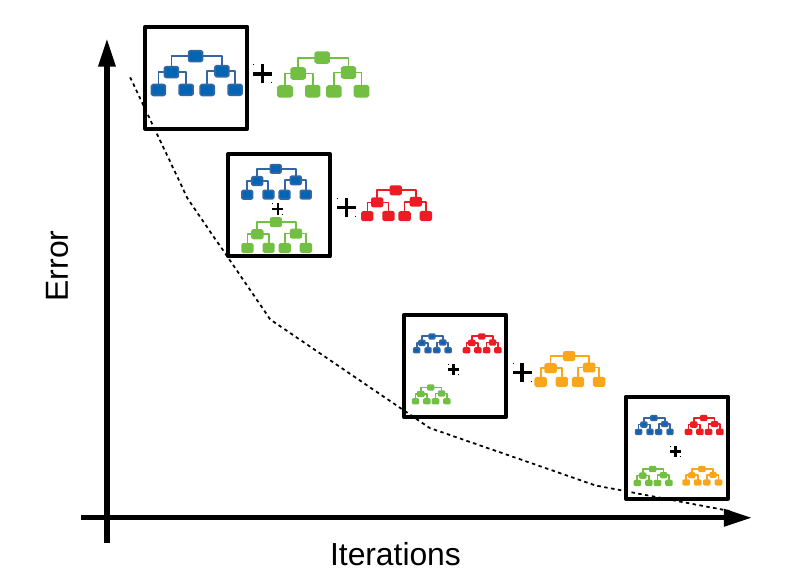

It is an ensemble technique that builds multiple weak learners, typically decision trees, one after another, with each new learner correcting the errors of the ones before it. Their predictions are combined into a single, more accurate strong learner.

It can be used for classification and regression tasks. 👈
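To make "sequential weak learners" concrete, here is a minimal sketch of boosting done by hand for a regression task with squared-error loss. It is a simplified illustration, not how scikit-learn implements it internally. The toy data and the `learning_rate` and `n_trees` values are assumptions chosen only for the demo.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Toy regression data (synthetic, for illustration only).
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(200, 1))
y = np.sin(X[:, 0]) + rng.normal(scale=0.1, size=200)

learning_rate = 0.1  # assumed value for the demo
n_trees = 50         # assumed value for the demo

# Start from a constant prediction: the mean of the targets.
prediction = np.full_like(y, y.mean())
mse_history = [np.mean((y - prediction) ** 2)]

for _ in range(n_trees):
    residuals = y - prediction  # what the ensemble still gets wrong
    tree = DecisionTreeRegressor(max_depth=2, random_state=0)
    tree.fit(X, residuals)      # the weak learner fits the remaining errors
    prediction += learning_rate * tree.predict(X)
    mse_history.append(np.mean((y - prediction) ** 2))

print(f"training MSE before boosting: {mse_history[0]:.4f}")
print(f"training MSE after {n_trees} trees: {mse_history[-1]:.4f}")
```

Each small tree only nudges the prediction a little, but the nudges add up. That is also why the training error keeps shrinking as trees are added, which is where the overfitting risk comes from.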

Advantages of Gradient Boosting Model:

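Robustness to missing values and outliers (depending on the implementation and the loss function)

Effective handling of high-cardinality categorical features (in implementations with native categorical support)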

How to prevent Overfitting in GBM?

Let’s discuss three important hyperparameters provided by scikit-learn to stop a Gradient Boosting model from overfitting.

1. Using Fewer Trees

In Gradient Boosting, you build models called trees one after another.

If you build too many trees, the model can become too complex.

Building fewer trees can help keep the model simple and general.

You can control the number of trees with the n_estimators parameter.

from sklearn.ensemble import GradientBoostingClassifier

gbm = GradientBoostingClassifier(n_estimators=20)

Here, you tell the model to build only 20 trees.
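How many trees is "too many" depends on the data. One way to check is to train once with a generous number of trees and look at the validation accuracy after each one. The sketch below uses `staged_predict` for that. The synthetic dataset, the 70/30 split, and the 200-tree budget are assumptions for the demo, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split

# Synthetic data with some label noise (flip_y), for illustration only.
X, y = make_classification(n_samples=1000, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, random_state=0)

gbm = GradientBoostingClassifier(n_estimators=200, random_state=0)
gbm.fit(X_train, y_train)

# staged_predict yields predictions after 1, 2, ..., n_estimators trees.
train_scores = [accuracy_score(y_train, pred)
                for pred in gbm.staged_predict(X_train)]
val_scores = [accuracy_score(y_val, pred)
              for pred in gbm.staged_predict(X_val)]

best_n_trees = val_scores.index(max(val_scores)) + 1
print(f"best validation accuracy {max(val_scores):.3f} at {best_n_trees} trees")
print(f"with all 200 trees: train {train_scores[-1]:.3f}, "
      f"validation {val_scores[-1]:.3f}")
```

If the training score keeps climbing while the validation score flattens or drops, the extra trees are mostly memorizing, and a smaller n_estimators is the safer choice.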

2. Make Trees Shorter

Shorter trees mean the model learns simple patterns, not complicated noise.

You can control how tall or deep the trees are by setting max_depth.

gbm = GradientBoostingClassifier(max_depth=3)

A depth of 3 means each tree can ask at most three questions in a row before making a decision.
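To see the effect of tree depth, you can train the same model with a few different max_depth values and compare training and validation accuracy. This is a rough sketch on synthetic data. The dataset and the depths 1, 3, and 8 are assumptions picked for the demo.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

# Synthetic data with some label noise (flip_y), for illustration only.
X, y = make_classification(n_samples=600, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, random_state=0)

results = {}
for depth in (1, 3, 8):
    gbm = GradientBoostingClassifier(max_depth=depth, random_state=0)
    gbm.fit(X_train, y_train)
    # Store (training accuracy, validation accuracy) for each depth.
    results[depth] = (gbm.score(X_train, y_train), gbm.score(X_val, y_val))
    print(f"max_depth={depth}: train {results[depth][0]:.3f}, "
          f"validation {results[depth][1]:.3f}")
```

A big gap between the training and validation numbers at larger depths is the usual sign that the trees are deep enough to memorize noise.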

3. Slow Down the Learning

Another way to prevent overfitting is to make the model learn slowly.

You can do this by setting a smaller learning_rate.

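gbm = GradientBoostingClassifier(learning_rate=0.1)

If the learning rate is too high, each new tree makes a large correction and the model can jump to conclusions too quickly.

A small learning rate makes the model take small, careful steps. Note that 0.1 is also scikit-learn's default, so slowing the learning down further means choosing a value below it, such as 0.05.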

Tip: If you lower the learning rate, you might need more trees to reach good performance.
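The trade-off in the tip above can be checked with a small experiment: keep the data fixed and vary learning_rate and n_estimators together. The sketch below is only illustrative. The synthetic dataset and the four settings are assumptions, and the numbers will differ on real data.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

# Synthetic data with some label noise (flip_y), for illustration only.
X, y = make_classification(n_samples=600, n_features=20, n_informative=5,
                           flip_y=0.1, random_state=0)
X_train, X_val, y_train, y_val = train_test_split(
    X, y, test_size=0.3, random_state=0)

# (learning_rate, n_estimators) pairs to compare; assumed values for the demo.
settings = [(1.0, 100), (0.1, 100), (0.01, 100), (0.01, 500)]

results = {}
for learning_rate, n_estimators in settings:
    gbm = GradientBoostingClassifier(learning_rate=learning_rate,
                                     n_estimators=n_estimators,
                                     random_state=0)
    gbm.fit(X_train, y_train)
    # Store (training accuracy, validation accuracy) for each setting.
    results[(learning_rate, n_estimators)] = (
        gbm.score(X_train, y_train), gbm.score(X_val, y_val))

for (learning_rate, n_estimators), (train_acc, val_acc) in results.items():
    print(f"learning_rate={learning_rate}, n_estimators={n_estimators}: "
          f"train {train_acc:.3f}, validation {val_acc:.3f}")
```

Comparing the two learning_rate=0.01 rows shows whether the slow learner simply needed more trees to catch up.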

If you’d like to explore the full implementation, including code and data, check out the GitHub repository. 👈🏻

Until next time, happy learning!