Understanding Type I and Type II Errors in Hypothesis Testing

...The Most Important Interview Question!

Without a solid understanding of statistics, it can be challenging to design effective A/B tests and accurately interpret the results.

Even after following all the required steps, your test result might be skewed by errors that unknowingly creep into the process.

These errors — Type I and Type II Errors — can lead to incorrect conclusions, causing you to declare a false result.

Misinterpreting A/B test results this way can mislead your entire optimization program, potentially costing you conversions or revenue.

Let’s take a closer look at:

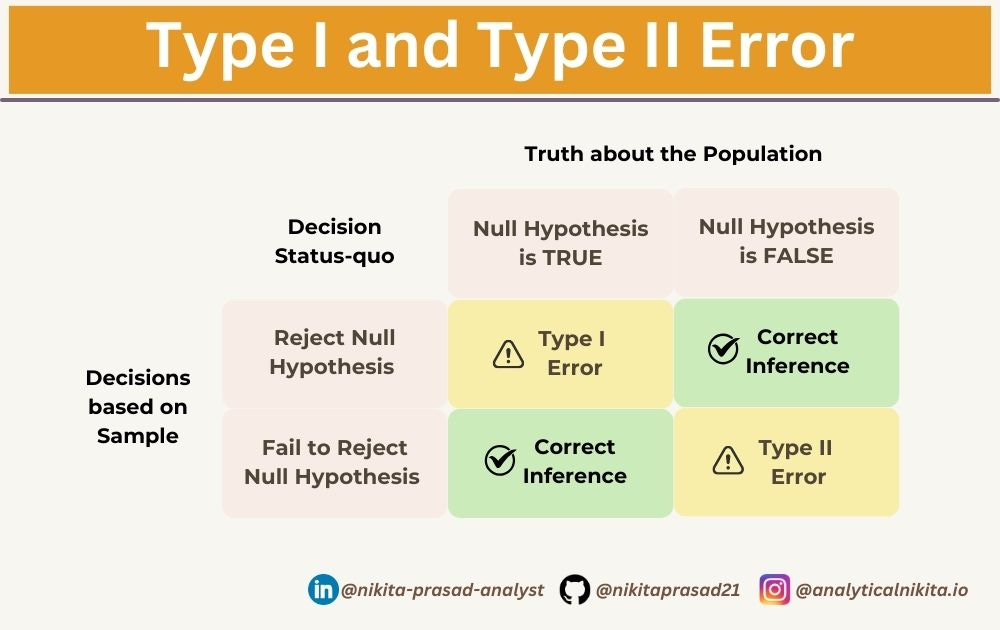

What exactly do we mean by Type I and Type II Errors?

Their consequences, and

How you can minimize them.

By the end of this post, you will have a solid understanding of how they differ, which is useful both in interviews and for real-world decision-making as a Data Scientist.


Type I vs. Type II Errors

A popular data science interview question.

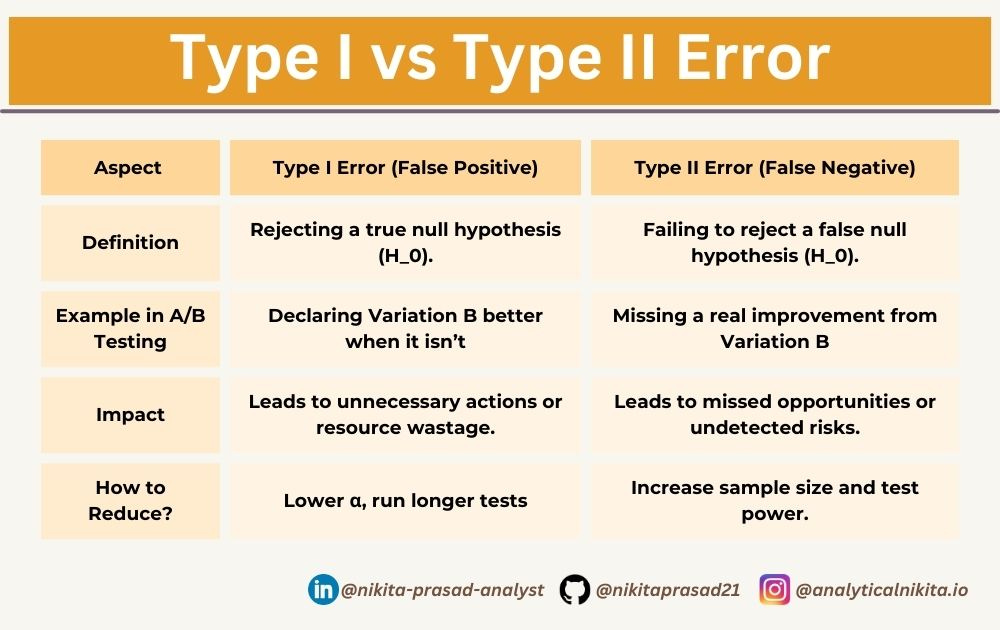

The table above summarizes the key differences between them.

Let’s discuss the differences below.

What is a Type I Error?

A Type I error, also known as a false positive, occurs when you incorrectly reject the null hypothesis (H0) when it is actually true.

Simply put, you wrongly conclude that there is a significant effect or difference when, in reality, there is none.

💡 Note: The probability of making this error is alpha (α), also known as the significance level. It is set before you run the test, commonly at 0.05 (5%), or at 0.01 for stricter tests.
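To see what α means in practice, here is a small simulation, a sketch that assumes a one-sided z-test. When H0 is true, the z statistic follows a standard normal distribution, so the share of tests that land beyond the critical value should come out close to α. The function name `type_i_error_rate` is hypothetical, chosen for illustration.

```python
import random
from statistics import NormalDist


def type_i_error_rate(alpha=0.05, n_tests=100_000, seed=0):
    """Estimate how often a one-sided z-test rejects a TRUE null hypothesis.

    Under H0 the z statistic is standard normal, so we draw it directly
    and count how often it exceeds the critical value.
    """
    rng = random.Random(seed)
    z_critical = NormalDist().inv_cdf(1 - alpha)  # about 1.645 for alpha = 0.05
    rejections = sum(rng.gauss(0, 1) > z_critical for _ in range(n_tests))
    return rejections / n_tests


for alpha in (0.05, 0.01):
    print(f"alpha = {alpha}: observed false-positive rate = {type_i_error_rate(alpha):.4f}")
```

Every rejection in this simulation is a false positive, because the null hypothesis is true by construction. Lowering α lowers that rate proportionally.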

Example of Type I Error:

Assume you're running an A/B test on an e-commerce platform to determine whether adding a 360-degree product video feature to the product page increases the conversion rate and overall revenue, compared with a product page that shows only images.

The null hypothesis (H0) is that the 360-degree product video feature has no effect on the conversion rate.

The alternative hypothesis (HA) is that the 360-degree product video feature increases conversions.

If the test incorrectly rejects H0 and concludes that the video feature increases conversions when it actually doesn't, that's a Type I error.
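Here is how that decision might look in code, a minimal sketch assuming a one-sided two-proportion z-test and entirely hypothetical traffic numbers (10,000 visitors per arm, 500 vs. 560 conversions). The helper `one_sided_z_test` is illustrative, not taken from any particular library.

```python
from statistics import NormalDist


def one_sided_z_test(conv_control, n_control, conv_variant, n_variant):
    """Two-proportion z-test of H0: variant rate == control rate
    against HA: variant rate > control rate. Returns (z, p_value)."""
    p_c = conv_control / n_control
    p_v = conv_variant / n_variant
    # Pooled rate: the best estimate of the common rate if H0 is true.
    pooled = (conv_control + conv_variant) / (n_control + n_variant)
    se = (pooled * (1 - pooled) * (1 / n_control + 1 / n_variant)) ** 0.5
    z = (p_v - p_c) / se
    return z, 1 - NormalDist().cdf(z)


# Hypothetical data: images only (control) vs. 360-degree videos (variant).
alpha = 0.05
z, p = one_sided_z_test(conv_control=500, n_control=10_000,
                        conv_variant=560, n_variant=10_000)
print(f"z = {z:.2f}, p = {p:.3f}")  # z = 1.89, p = 0.029
print("Reject H0" if p < alpha else "Fail to reject H0")  # Reject H0
```

Rejecting H0 here is the right call only if the videos really lift conversions. If they don't, this exact same output is a Type I error. The test alone cannot tell you which case you are in; α only caps how often the second case happens.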

What is a Type II Error?

A Type II error, also known as a false negative, happens when you fail to reject the null hypothesis (H0) when it is actually false.

This means you conclude that there is no significant effect or difference when, in reality, there is one.

💡 Note: The probability of making a Type II error is denoted beta (β). The lower β is, the higher the statistical power of your test (Power = 1 − β).
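Written as conditional probabilities (standard notation, added here as a compact reference), the quantities defined so far are:

$$
\begin{aligned}
\alpha &= P(\text{reject } H_0 \mid H_0 \text{ true}) && \text{(Type I error rate)} \\
\beta &= P(\text{fail to reject } H_0 \mid H_0 \text{ false}) && \text{(Type II error rate)} \\
\text{Power} &= 1 - \beta = P(\text{reject } H_0 \mid H_0 \text{ false})
\end{aligned}
$$

Note that α and β condition on different states of the world, which is why they do not sum to 1.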

Example of Type II Error:

Let's consider the same e-commerce A/B test:

If the test fails to detect a real increase in conversions (even though the video feature actually improves sales), that's a Type II error.

💡 Note: The higher the statistical power of your test, the lower the likelihood of a Type II error (false negative).

If you run a test at 90% statistical power, there is only a 10% chance of ending up with a false negative, provided the true effect is as large as the minimum effect size the test was designed to detect.
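The link between power and β can be checked numerically. This is a sketch assuming a one-sided two-proportion z-test with the normal approximation; the 5% baseline and 6% variant rates are hypothetical, and `power_one_sided` is an illustrative helper name.

```python
from statistics import NormalDist


def power_one_sided(p_control, p_variant, n_per_arm, alpha=0.05):
    """Approximate power of a one-sided two-proportion z-test.

    Uses the normal approximation with unpooled variances and assumes
    the true conversion rates really are p_control and p_variant.
    """
    norm = NormalDist()
    se = ((p_control * (1 - p_control) + p_variant * (1 - p_variant)) / n_per_arm) ** 0.5
    z_critical = norm.inv_cdf(1 - alpha)
    return norm.cdf((p_variant - p_control) / se - z_critical)


# Hypothetical rates: 5% baseline, 6% with the video feature.
for n in (2_000, 5_000, 8_900):
    power = power_one_sided(0.05, 0.06, n)
    print(f"n = {n:>5} per arm: power = {power:.2f}, beta = {1 - power:.2f}")
```

At roughly 8,900 visitors per arm, power reaches about 90% and β about 10%, but only if the true lift really is one percentage point. With fewer visitors, the same real improvement is missed far more often.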

The statistical power of a test depends on:

the statistical significance threshold (α),

the sample size,

the minimum effect size of interest,

the number of variations of the test.
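A rough sample-size calculation shows how each of these factors feeds into power. This is a sketch using the standard normal-approximation formula for a one-sided two-proportion test; the rates are hypothetical, and splitting α across variants (a Bonferroni correction) is an assumption made here as one common way to handle multiple variations, not the only one.

```python
import math
from statistics import NormalDist


def sample_size_per_arm(p_control, min_lift, alpha=0.05, power=0.90, n_variants=1):
    """Visitors needed per arm for a one-sided two-proportion z-test.

    min_lift is the minimum absolute effect of interest (0.01 = +1 point).
    With several variants, alpha is split across the comparisons
    (Bonferroni correction) to keep the overall Type I error rate at alpha.
    """
    norm = NormalDist()
    p_variant = p_control + min_lift
    z_alpha = norm.inv_cdf(1 - alpha / n_variants)
    z_power = norm.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    return math.ceil((z_alpha + z_power) ** 2 * variance / min_lift ** 2)


print("baseline:", sample_size_per_arm(0.05, 0.01))  # 8898
print("stricter alpha = 0.01:", sample_size_per_arm(0.05, 0.01, alpha=0.01))
print("smaller effect (0.5 points):", sample_size_per_arm(0.05, 0.005))
print("higher power (0.95):", sample_size_per_arm(0.05, 0.01, power=0.95))
print("3 variants vs. control:", sample_size_per_arm(0.05, 0.01, n_variants=3))
```

Each change in the list (a stricter α, a smaller minimum effect, a higher power target, more variations) raises the number of visitors needed to keep β at the desired level.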

Can You Guess — Which is Worse?

The impact of both errors depends upon the specific business context.

While Type II errors mean missing out on a change that would have worked, at least you don't implement a costly mistake.

Type I errors are often more damaging because they lead to decisions based on false positives: you roll out a change that doesn't actually help.

Type II errors are usually less dangerous because you keep using the current version, which has already shown some level of success with users over time.

Although you miss out on potential conversion gains, you can always re-test later with a larger sample size.

The associated risks are generally lower than the risks of implementing a change that negatively affects the user experience and wastes the resources you spent on the test.

The trade-off: for a fixed sample size, lowering α reduces the risk of Type I errors but increases β, the risk of Type II errors.
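The trade-off can be made explicit with the normal approximation for a one-sided two-proportion test (a sketch under that assumption), where p_A and p_B are the true conversion rates, Δ = p_B − p_A is the true lift, n is the number of visitors per arm, and Φ is the standard normal CDF:

$$
\beta \approx \Phi\!\left(z_{1-\alpha} - \frac{\Delta}{\mathrm{SE}}\right),
\qquad
\mathrm{SE} = \sqrt{\frac{p_A(1-p_A) + p_B(1-p_B)}{n}}
$$

With n and Δ fixed, lowering α from 0.05 to 0.01 raises the critical value z_{1−α} from about 1.645 to about 2.326, which pushes β up. Only a larger sample (a smaller SE) or a larger true effect lowers both error rates at once.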

Hence, the balance between Type I and Type II errors is managed by choosing an appropriate significance level (α) and conducting power analysis to estimate the probability of a Type II error (β).

The choice of (α) and (β) depends on the specific context and the consequences of making each type of error.

➡️ HERE's the Step-by-Step Walkthrough to A/B Testing Fundamentals, including a detailed overview of all the terms used above and much more.

👉 Over to you:

What other key differences between Type I and Type II errors did I miss?

Drop your thoughts in the comments below!

Thanks for reading!

Until next time, happy learning!