Let’s break down the core of Decision Tree Regression: what it is, how it works, and why it matters from a data scientist's perspective, along with a sample problem statement.

By the end of this, you will have a solid grasp of this algorithm, which is helpful both for interview preparation and for day-to-day work as a data scientist.


What is a Decision Tree?

A decision tree is a supervised learning algorithm that uses a tree-like structure of decisions to predict either a target value (regression) or a target class (classification).

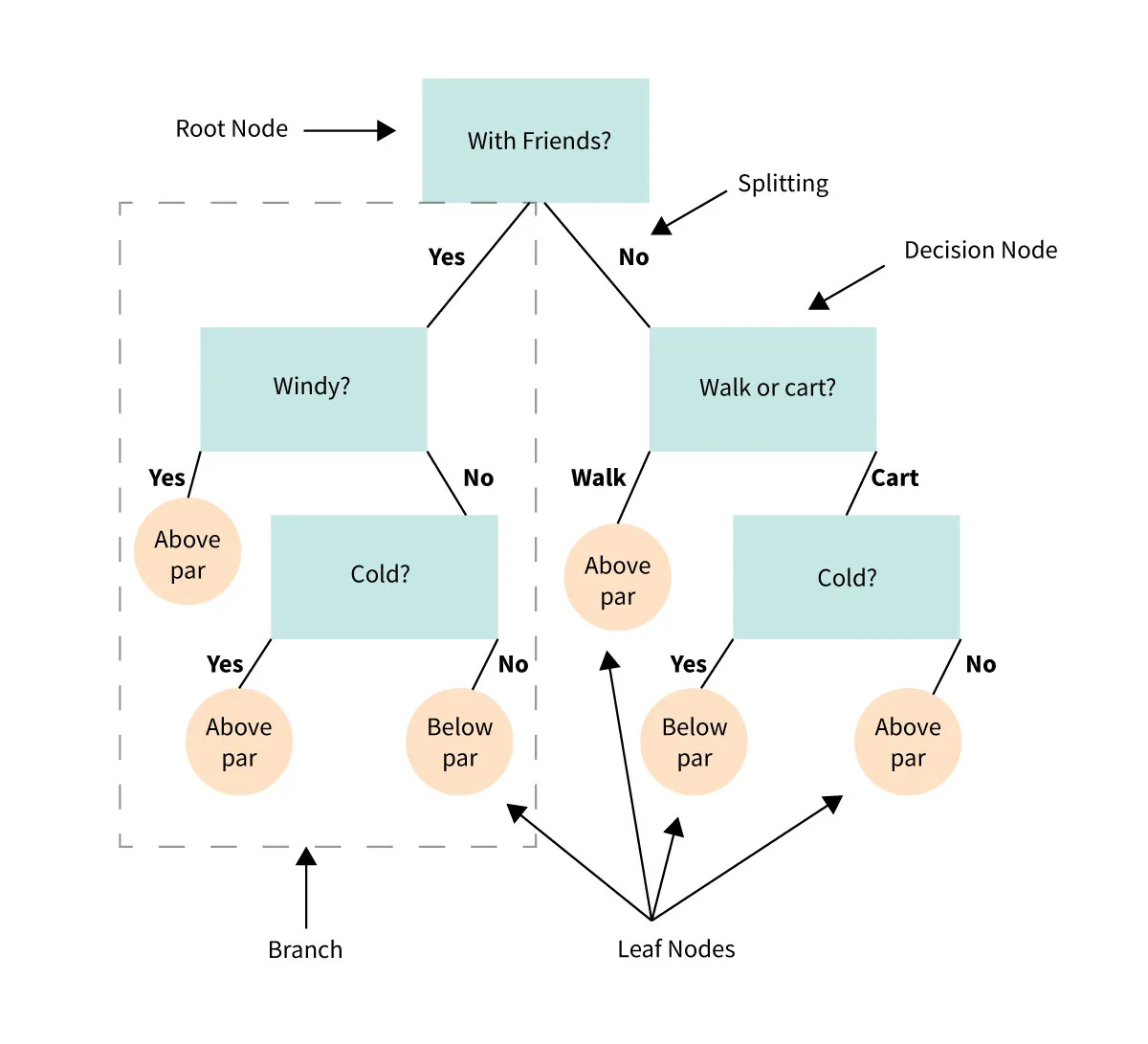

Before diving into how decision trees work, let's become familiar with the basic structure and terminology of a decision tree:

Root Node: The topmost node, representing all data points.

Splitting: The process of dividing a node into two or more sub-nodes.

Decision Node: A node that splits further into sub-nodes.

Leaf / Terminal Node: A node that does not split further; it holds the final result (the prediction).

Branch / Sub-Tree: A subsection of the entire tree.

Parent and Child Node: A node that divides into sub-nodes is the parent; those sub-nodes are its children.

Pruning: Removing sub-nodes of a decision node is called pruning. Pruning is often done in decision trees to prevent overfitting.
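To connect these terms to an actual fitted model, here is a small sketch using scikit-learn's `DecisionTreeRegressor`. The library choice and the toy data are assumptions for illustration, not taken from the article's repository. The code walks the fitted tree's low-level `tree_` arrays and labels each node as the root, a decision node, or a leaf; in scikit-learn, a child index of `-1` marks a leaf.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor

# Toy data (made up for illustration): one feature, continuous target
X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [6.0], [7.0], [8.0]])
y = np.array([5.0, 6.0, 5.5, 6.5, 20.0, 21.0, 19.5, 20.5])

model = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, y)
t = model.tree_  # low-level array representation of the fitted tree

# Node 0 is the root node: it receives every training sample
print("root node samples:", t.n_node_samples[0])

for node in range(t.node_count):
    if t.children_left[node] == -1:
        # Leaf / terminal node: no children; stores the mean target of its samples
        print(f"node {node}: leaf, predicts {t.value[node][0][0]:.2f}")
    else:
        # Decision node: the parent of two child nodes
        print(f"node {node}: decision node, x[{t.feature[node]}] <= {t.threshold[node]:.2f}, "
              f"children {t.children_left[node]} and {t.children_right[node]}")

print("depth:", model.get_depth(), "| leaves:", model.get_n_leaves())
```

Here every non-leaf node is both a decision node and a parent, and the subtree hanging off each child of the root is a branch.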

What Is a Decision Tree Regressor?

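A Decision Tree Regressor is used when the target is continuous, such as a house price or a stock value.

It learns the input-output relationship by recursively splitting the dataset into smaller segments; each final segment (leaf) predicts a single value, typically the mean target of the training samples it contains.

At each node, the algorithm chooses the feature and split point that minimize the prediction error, typically measured as the weighted mean squared error of the two resulting child nodes.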
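The split search described above can be sketched for a single numeric feature. This is a minimal version under simple assumptions: it tries every midpoint between consecutive distinct sorted values and scores each candidate by the sample-weighted MSE of the two child nodes, where each child predicts its own mean. The function name `best_split` and the toy data are hypothetical.

```python
import numpy as np


def best_split(x, y):
    """Return (threshold, weighted_mse) of the best binary split x <= threshold.

    Hypothetical helper: exhaustive search over midpoints between consecutive
    distinct sorted values, scored by the sample-weighted MSE of both children.
    """
    order = np.argsort(x)
    x_sorted, y_sorted = x[order], y[order]
    n = len(y_sorted)
    best_threshold, best_score = None, np.inf
    for i in range(1, n):
        # No valid cut between two equal feature values
        if x_sorted[i] == x_sorted[i - 1]:
            continue
        left, right = y_sorted[:i], y_sorted[i:]
        # np.var is the MSE around each child's mean (the child's prediction)
        score = (len(left) * np.var(left) + len(right) * np.var(right)) / n
        if score < best_score:
            best_threshold = (x_sorted[i - 1] + x_sorted[i]) / 2
            best_score = score
    return best_threshold, best_score


x = np.array([1, 2, 3, 4, 5, 6, 7, 8], dtype=float)
y = np.array([5.0, 6.0, 5.5, 6.5, 20.0, 21.0, 19.5, 20.5])
threshold, score = best_split(x, y)
print(f"split at x <= {threshold}: weighted MSE {score:.4f} vs parent MSE {np.var(y):.4f}")
```

On this toy data the best cut falls between 4 and 5, where the target jumps, and the weighted MSE drops far below the MSE of the unsplit parent node. A full tree would typically repeat this search over every feature and keep the overall best split.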

How It Works

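The algorithm examines the values of each feature in the training data.

It builds a tree whose leaves output continuous predictions (typically the mean target value of the training samples in each leaf).

At each split, it tries to reduce the mean squared error (MSE), or another criterion such as the mean absolute error.

The process continues until certain stopping criteria are met, such as a maximum depth or a minimum number of samples per node.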

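How Is a Decision Tree Classifier Different?

Classification trees predict categorical targets (for example, yes/no), while regression trees predict numerical targets, such as the price of a stock.

Both are commonly grouped under the name CART (Classification and Regression Trees). They are grown with the same recursive splitting procedure; the main differences are the split criterion (for example, Gini impurity or entropy for classification versus MSE for regression) and what a leaf outputs (the majority class versus the mean target value).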
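A side-by-side sketch makes the difference concrete. Using scikit-learn (an assumption) on a tiny made-up dataset of hours studied, the classifier predicts a class label, while the regressor predicts the mean target value of the training samples that land in the same leaf. Both trees are limited to a single split (`max_depth=1`) so the outputs are easy to trace by hand.

```python
import numpy as np
from sklearn.tree import DecisionTreeClassifier, DecisionTreeRegressor

# Made-up data: hours studied -> exam score (numeric) and pass/fail (categorical)
hours = np.array([[1], [2], [3], [4], [5], [6], [7], [8]])
score = np.array([35, 42, 48, 55, 68, 74, 81, 90])
passed = np.array(["no", "no", "no", "no", "yes", "yes", "yes", "yes"])

clf = DecisionTreeClassifier(max_depth=1, random_state=0).fit(hours, passed)
reg = DecisionTreeRegressor(max_depth=1, random_state=0).fit(hours, score)

query = np.array([[2.5], [6.5]])
print(clf.predict(query))  # class labels (majority class in each leaf)
print(reg.predict(query))  # mean exam score of the training samples in each leaf
```

Both trees split between 4 and 5 hours; only the leaf output differs.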

Training Decision Trees

In general, a decision tree represents a hierarchical series of binary decisions. Training one involves:

Letting the algorithm find the best split at each level.

Building branches until one of the following holds:

The tree reaches a maximum depth, or

The data in a node is pure enough (for regression, the target values in the node vary very little), or

The node has fewer samples than a set minimum.
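The training procedure and stopping criteria above can be sketched as a small recursive function in plain NumPy. This is a simplified CART-style illustration, not a production implementation: the names `build_tree`, `predict_one`, and `min_variance` are hypothetical, and `min_variance` is one simple way to interpret "pure enough" for a continuous target. `max_depth` and `min_samples_split` loosely mirror the scikit-learn parameters of the same names.

```python
import numpy as np


def build_tree(X, y, depth=0, max_depth=3, min_samples_split=4, min_variance=1e-3):
    """Recursively grow a regression tree (hypothetical, minimal CART-style sketch).

    Leaves are dicts {"value": mean}; decision nodes are dicts
    {"feature", "threshold", "left", "right"}, where x[feature] <= threshold goes left.
    """
    # Stopping criteria: maximum depth, too few samples, or a node that is "pure enough"
    if depth >= max_depth or len(y) < min_samples_split or np.var(y) <= min_variance:
        return {"value": float(np.mean(y))}

    best = None  # (weighted_mse, feature_index, threshold)
    for j in range(X.shape[1]):
        # Every distinct value except the largest is a candidate threshold
        for t in np.unique(X[:, j])[:-1]:
            mask = X[:, j] <= t
            left, right = y[mask], y[~mask]
            score = (len(left) * np.var(left) + len(right) * np.var(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, j, t)

    if best is None:  # every feature is constant in this node, so no split exists
        return {"value": float(np.mean(y))}

    _, j, t = best
    mask = X[:, j] <= t
    kwargs = dict(max_depth=max_depth, min_samples_split=min_samples_split,
                  min_variance=min_variance)
    return {
        "feature": j,
        "threshold": float(t),
        "left": build_tree(X[mask], y[mask], depth + 1, **kwargs),
        "right": build_tree(X[~mask], y[~mask], depth + 1, **kwargs),
    }


def predict_one(node, x):
    # Follow the split conditions from the root down to a leaf
    while "value" not in node:
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node["value"]


X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [6.0], [7.0], [8.0]])
y = np.array([5.0, 6.0, 5.5, 6.5, 20.0, 21.0, 19.5, 20.5])
root = build_tree(X, y)
print("root split: x[%d] <= %.1f" % (root["feature"], root["threshold"]))
print([predict_one(root, x) for x in np.array([[2.0], [5.5], [7.5]])])
```

The search is greedy: each node takes the locally best split and never revisits it, which is also why pruning or stopping criteria are needed to keep the tree from memorizing noise.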

Rather than relying on manually set rules, the algorithm finds the best split conditions on its own, greedily choosing one split at a time.
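To see the conditions a tree learned, scikit-learn's `export_text` (assuming scikit-learn is the library in use) prints a fitted tree as nested if/else-style rules. The housing-style features and prices below are made up for illustration.

```python
import numpy as np
from sklearn.tree import DecisionTreeRegressor, export_text

# Made-up housing-style data (illustrative only): [size_sqft, bedrooms] -> price
X = np.array([
    [850, 2], [900, 2], [1200, 3], [1250, 3],
    [1800, 3], [1850, 4], [2400, 4], [2500, 5],
])
y = np.array([150, 160, 210, 220, 300, 320, 410, 430])  # price in $1000s

reg = DecisionTreeRegressor(max_depth=2, random_state=0).fit(X, y)

# The split rules below were learned from the data, not written by hand
rules = export_text(reg, feature_names=["size_sqft", "bedrooms"])
print(rules)
```

Each `value:` line in the output is a leaf, showing the mean price of the training houses that reach it.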

Don't forget to explore the full implementation, including code and data, in the GitHub Repository 👈🏻

Alright, that’s a wrap! If you’ve made it this far, thank you! Stay tuned so you won’t miss future updates.

Until next time, happy learning!