One of the most frequently asked data science interview questions: what is the difference between bagging and boosting?

Today, let’s understand the core differences in how these two techniques work, and how they relate to two powerful ML algorithms: Random Forest (bagging) and XGBoost (boosting).

In machine learning, ensemble methods combine multiple models to improve performance.

Two of the most popular ensemble techniques are bagging and boosting.

Understanding their differences is crucial to choosing the right model for your problem.

Let's explore!

🌲 What is Bagging?

Bagging stands for Bootstrap Aggregating.

The idea is simple:

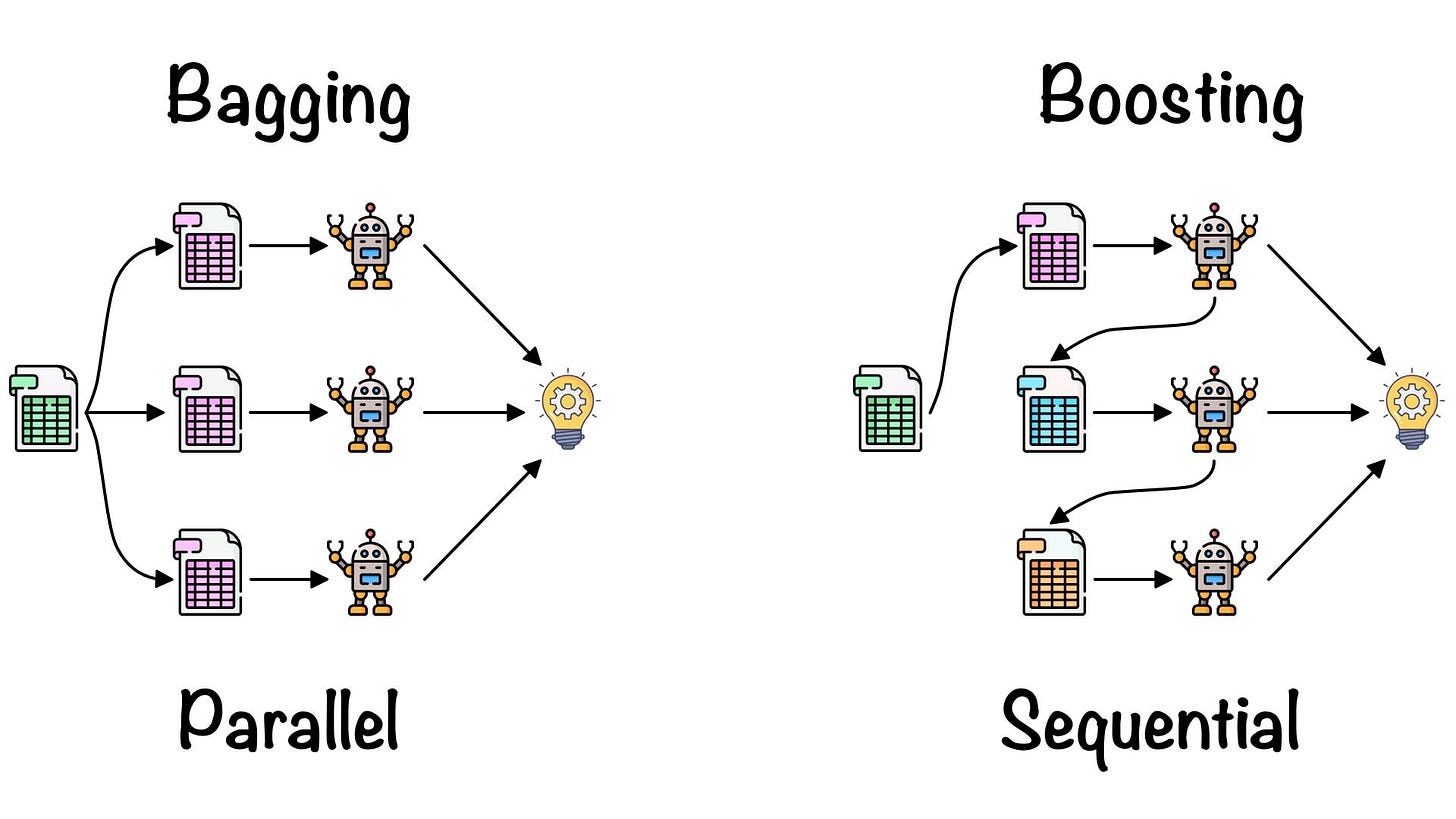

It builds multiple models (usually decision trees) on different random subsets of the data.

These subsets are created through sampling with replacement (i.e. bootstrap sampling).

Predictions are averaged (for regression) or decided by majority vote (for classification).
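To make the three steps above concrete, here is a minimal sketch of bagging in plain Python. It is an illustration under simplifying assumptions, not how Random Forest is implemented: the base model is a one-feature threshold classifier (a "stump") rather than a full decision tree, and the names `bootstrap_sample`, `fit_stump`, `bagging_fit`, and `bagging_predict`, along with the toy data, are made up for this example.

```python
import random
from collections import Counter

def bootstrap_sample(rows, rng):
    """Draw len(rows) rows with replacement (a bootstrap sample)."""
    return [rng.choice(rows) for _ in rows]

def fit_stump(rows):
    """Fit a tiny base model: predict class 1 when x > threshold.

    Tries every x value in the sample as a threshold and keeps the one
    with the fewest training errors.
    """
    best_threshold, best_errors = None, len(rows) + 1
    for threshold, _ in rows:
        errors = sum(int(x > threshold) != y for x, y in rows)
        if errors < best_errors:
            best_threshold, best_errors = threshold, errors
    return best_threshold

def bagging_fit(rows, n_models=25, seed=0):
    """Train n_models stumps, each on its own bootstrap sample."""
    rng = random.Random(seed)
    return [fit_stump(bootstrap_sample(rows, rng)) for _ in range(n_models)]

def bagging_predict(thresholds, x):
    """Classification: every model votes, the majority class wins."""
    votes = Counter(int(x > t) for t in thresholds)
    return votes.most_common(1)[0][0]

# Toy data (hypothetical): (feature, label) pairs, class 1 for larger x.
data = [(0.5, 0), (1.0, 0), (1.5, 0), (2.0, 0),
        (3.0, 1), (3.5, 1), (4.0, 1), (4.5, 1)]

models = bagging_fit(data)
print(bagging_predict(models, 0.0), bagging_predict(models, 5.0))
```

For a regression problem, the voting step would be replaced by averaging the models' numeric predictions.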

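Random Forest is the most widely used bagging algorithm. It builds many decision trees, each trained on a bootstrap sample and restricted to a random subset of features at every split, and combines their predictions to get a more accurate and stable result.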

🚀 What is Boosting?

Boosting is a sequential technique:

It builds models one after another, where each new model focuses on the mistakes of the previous one.

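Instead of drawing an independent sample for each model, it either reweights the training data points so that misclassified ones count more (as in AdaBoost) or fits each new model to the errors that remain (as in gradient boosting).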

The final prediction is a weighted sum of all models.
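The reweighting idea can be sketched with a small AdaBoost-style loop in plain Python. Treat this as an illustration only: it shows the weight-adjusting flavor of boosting, whereas XGBoost is a gradient boosting method that fits each new tree to gradients of a loss. The function names and the toy dataset are assumptions made for this example.

```python
import math

def stump_predict(threshold, sign, x):
    """Predict `sign` when x > threshold, otherwise the opposite label."""
    return sign if x > threshold else -sign

def fit_weighted_stump(xs, ys, weights):
    """Return (weighted_error, threshold, sign) of the best stump."""
    best = None
    for threshold in xs:
        for sign in (1, -1):
            error = sum(w for w, x, y in zip(weights, xs, ys)
                        if stump_predict(threshold, sign, x) != y)
            if best is None or error < best[0]:
                best = (error, threshold, sign)
    return best

def adaboost_fit(xs, ys, n_rounds=3):
    """Train stumps one after another, reweighting the data each round."""
    n = len(xs)
    weights = [1.0 / n] * n          # start with uniform weights
    ensemble = []
    for _ in range(n_rounds):
        error, threshold, sign = fit_weighted_stump(xs, ys, weights)
        error = min(max(error, 1e-10), 1 - 1e-10)    # avoid log(0)
        alpha = 0.5 * math.log((1 - error) / error)  # this model's say
        ensemble.append((alpha, threshold, sign))
        # Misclassified points (y * prediction == -1) get heavier,
        # correctly classified points get lighter.
        weights = [w * math.exp(-alpha * y * stump_predict(threshold, sign, x))
                   for w, x, y in zip(weights, xs, ys)]
        total = sum(weights)
        weights = [w / total for w in weights]
    return ensemble

def adaboost_predict(ensemble, x):
    """Final prediction: sign of the alpha-weighted sum of all stumps."""
    score = sum(alpha * stump_predict(threshold, sign, x)
                for alpha, threshold, sign in ensemble)
    return 1 if score >= 0 else -1

# Toy data (hypothetical): labels in {-1, +1}; no single stump fits it.
xs = list(range(10))
ys = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

ensemble = adaboost_fit(xs, ys, n_rounds=3)
print([adaboost_predict(ensemble, x) for x in xs])
```

On this toy dataset a single stump gets 7 of 10 points right, while three boosted stumps classify all 10 correctly, because each round concentrates on the points the earlier stumps missed.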

XGBoost (short for Extreme Gradient Boosting) is a highly efficient and scalable boosting algorithm known for its accuracy and speed.

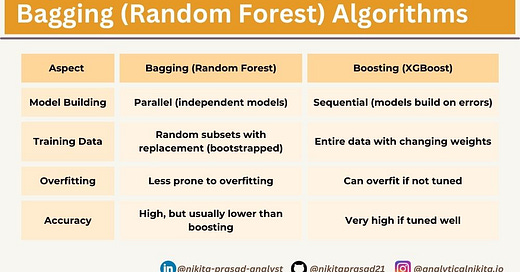

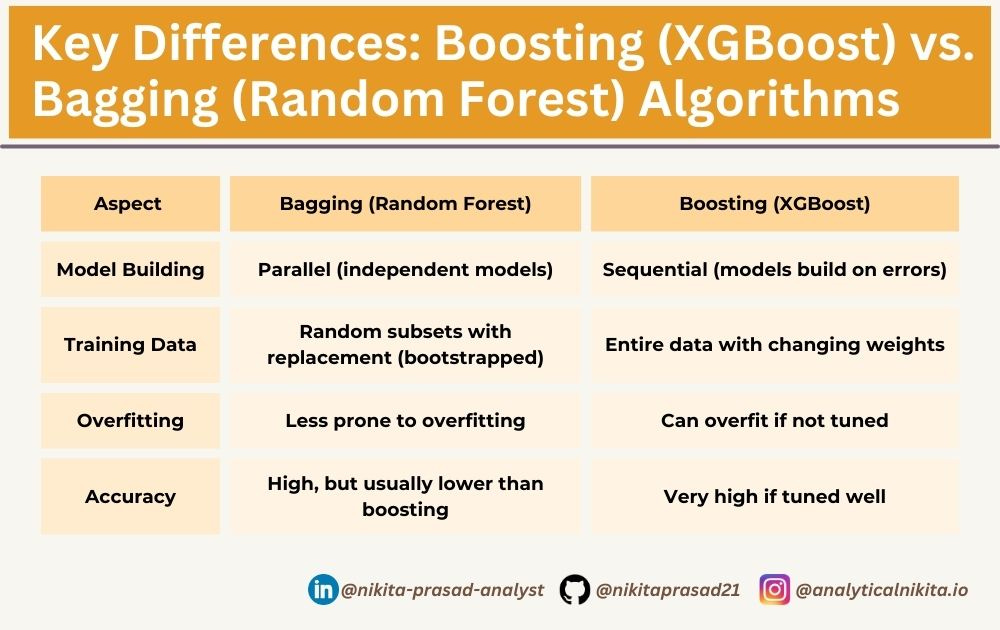

Here’s a side-by-side comparison:

Both Random Forest and XGBoost are powerful algorithms:

Use Random Forest if you want a fast, robust, low-bias, low-variance model that’s easy to tune and less prone to overfitting.

Use XGBoost if you need maximum predictive power and are ready to spend time tuning its hyperparameters.

In practice, it’s common to try both and compare performance using cross-validation.
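Here is a rough sketch of what "compare using cross-validation" means, written in plain Python so it runs without any libraries. The two models below are deliberately trivial stand-ins (a majority-class baseline and a threshold rule), not Random Forest or XGBoost; in a real project you would plug your actual models into the same kind of loop. All names and the toy data are assumptions made for this example.

```python
import random

def k_fold_splits(n_rows, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs over k shuffled folds."""
    indices = list(range(n_rows))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx

def cross_val_accuracy(fit, predict, xs, ys, k=5):
    """Mean held-out accuracy of a (fit, predict) pair across k folds."""
    scores = []
    for train_idx, test_idx in k_fold_splits(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        hits = sum(predict(model, xs[i]) == ys[i] for i in test_idx)
        scores.append(hits / len(test_idx))
    return sum(scores) / len(scores)

# Stand-in model A: always predict the most common training label.
def fit_majority(xs, ys):
    return max(set(ys), key=ys.count)

def predict_majority(model, x):
    return model

# Stand-in model B: threshold halfway between the two class means.
def fit_threshold(xs, ys):
    zeros = [x for x, y in zip(xs, ys) if y == 0]
    ones = [x for x, y in zip(xs, ys) if y == 1]
    return (sum(zeros) / len(zeros) + sum(ones) / len(ones)) / 2

def predict_threshold(model, x):
    return 1 if x > model else 0

# Toy data (hypothetical): class 0 for x < 2.0, class 1 otherwise.
xs = [i / 10 for i in range(40)]
ys = [0] * 20 + [1] * 20

majority_acc = cross_val_accuracy(fit_majority, predict_majority, xs, ys)
threshold_acc = cross_val_accuracy(fit_threshold, predict_threshold, xs, ys)
print(f"majority: {majority_acc:.2f}  threshold: {threshold_acc:.2f}")
```

The key point is that both candidates are scored on the same held-out folds, so the comparison reflects performance on data neither model saw during training.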

If you’d like to explore the full implementation, including code and data, check out the GitHub repository 👈🏻

Until next time, happy learning!