Sub-Word Tokenization Using Byte-Pair Encoding

Must-Known Deep Dive to SOLVE the OOV Problem Effectively

Previously, we implemented a simple tokenization class.

If you missed it (really?), I highly recommend giving it a read 👇🏻:

Working with Text Data - Tokenization

With the rise of LLM-based development, you can now build an application (using prompts) with open-source or closed-source Gen-AI models in a few weeks, something that would conventionally have taken several months.

And while you’re at it, subscribe so that you never miss any more of this content.

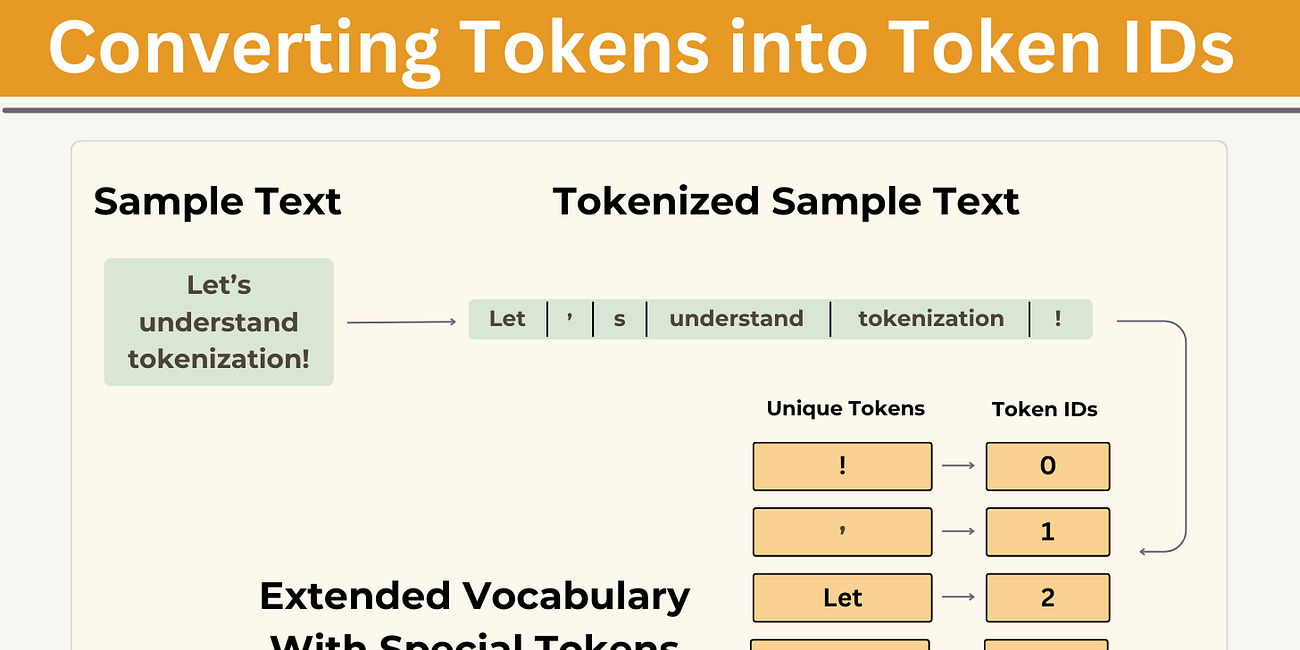

So, by now you must have understood the operations a tokenizer can handle, i.e. splitting text into tokens, converting tokens to IDs, and converting IDs back to a string.

But due to the Out-of-Vocabulary (OOV) problem, we weren’t able to generalize our decoded output to words outside the vocabulary.

To solve this problem, we can use Sub-Word Tokenization.

03. Sub-Word-Based Tokenization

This is the third type of tokenization technique; you can check out the other two here. 👈🏻

Sub-word tokenization algorithms rely on the principle that frequently used words should not be split into smaller sub-words, but rare words should be decomposed into meaningful sub-words.

What is Byte-Pair Encoding?

GPT-2 used Byte-Pair Encoding (BPE) as its tokenizer.

It allows the model to break down words that aren't in its predefined vocabulary into smaller sub-word units or even individual characters, enabling it to handle out-of-vocabulary words.

The core idea of BPE is to iteratively merge the most frequent pair of consecutive symbols (characters at first, then previously merged sub-words) in a text corpus, until the desired vocabulary size is reached.

Hence, the resulting sub-word units can be used to represent the original text more efficiently.

How does it achieve this without using `<|unk|>` tokens?

The BPE algorithm ensures that the most common words in the vocabulary are represented as a single token, while rare words are broken down into two or more sub-words. So if the tokenizer encounters an unfamiliar word during tokenization, it falls back to smaller sub-word units instead of an unknown token.

For instance, if GPT-2's vocabulary doesn't have the word "analytical-nikita.io", it might tokenize it as ["analytical", "-", "nik", "ita", ".", "io"] or some other sub-word breakdown, depending on its trained BPE merges.
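To make the fallback idea concrete, here is a minimal sketch of how a list of learned merges could be applied to a word the tokenizer has never seen. The `segment` function and the `toy_merges` list are hypothetical names made up for this illustration; they are not GPT-2's real merges and not how tiktoken is implemented internally.

```python
def segment(word, merges):
    """Split a word into characters, then apply each merge in learned order."""
    symbols = list(word)
    for left, right in merges:
        merged, i = [], 0
        while i < len(symbols):
            # Join the pair wherever it appears side by side
            if i + 1 < len(symbols) and symbols[i] == left and symbols[i + 1] == right:
                merged.append(left + right)
                i += 2
            else:
                merged.append(symbols[i])
                i += 1
        symbols = merged
    return symbols


# Assumed toy merge list (illustrative only)
toy_merges = [("a", "t"), ("c", "at"), ("u", "n")]

print(segment("cats", toy_merges))  # ['cat', 's']
print(segment("bat", toy_merges))   # ['b', 'at']
print(segment("sun", toy_merges))   # ['s', 'un']
print(segment("dog", toy_merges))   # ['d', 'o', 'g']
```

Notice that "dog" shares no merges with the toy list, so it simply stays as individual characters. Nothing ever needs to be mapped to an unknown token, as long as every character is in the base vocabulary.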

How does Byte-Pair Encoding (BPE) work?

As a very simple example, suppose our corpus uses these five words:

"cat", "mat", "fun", "bun", "cats"

Assume the words have the following frequencies:

Corpus (Split into Words): ("cat", 10), ("mat", 6), ("fun", 1), ("bun", 5), ("cats", 3)

To represent the text corpus using sub-word units in the vocabulary, we proceed as follows.

Initialize the vocabulary with all the unique characters in the text corpus.

Base Vocabulary: ["a", "b", "c", "f", "m", "n", "s", "t", "u"]

Split each word into characters, keeping its frequency, so that pairs of consecutive characters can be counted.

Initial Corpus (Split into Characters): ("c", "a", "t", 10), ("m", "a", "t", 6), ("f", "u", "n", 1), ("b", "u", "n", 5), ("c", "a", "t", "s", 3)

Repeat the following steps until the desired vocabulary size is reached:

Find the most frequent pair of consecutive symbols in the text corpus.

("a", "t"), ("u", "n")

Here ("a", "t") is the most frequent pair, with 19 occurrences (10 + 6 + 3), while ("u", "n") occurs 6 times (1 + 5). Strictly speaking, BPE merges one pair per iteration; the two merges are shown together here for brevity.

Merge the pair to create a new sub-word unit and update every word in the corpus that contains the merged pair.

Corpus: ("c", "at", 10), ("m", "at", 6), ("f", "un", 1), ("b", "un", 5), ("c", "at", "s", 3)

Add the new sub-word unit to the vocabulary.

Vocabulary: ["a", "b", "c", "f", "m", "n", "s", "t", "u", "at", "un"]

And we continue like this until we reach the desired vocabulary size. For example, once "at" exists, the pair ("c", "at") occurs 13 times (10 + 3), so it is merged into "cat":

Final Vocabulary: ["a", "b", "c", "f", "m", "n", "s", "t", "u", "at", "un", "cat"]

Corpus: ("cat", 10), ("m", "at", 6), ("f", "un", 1), ("b", "un", 5), ("cat", "s", 3)

Note: The original BPE tokenizer implementation can be found here.
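The merge loop walked through above can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not the original implementation: the function names (`count_pairs`, `merge_pair`, `train_bpe`) are made up for this example, and ties between equally frequent pairs are broken by picking the lexicographically largest pair, which is an arbitrary choice.

```python
from collections import Counter


def count_pairs(corpus):
    """Count adjacent symbol pairs, weighted by how often each word occurs."""
    pairs = Counter()
    for symbols, freq in corpus.items():
        for left, right in zip(symbols, symbols[1:]):
            pairs[(left, right)] += freq
    return pairs


def merge_pair(corpus, pair):
    """Return a new corpus where every occurrence of `pair` is joined."""
    merged = {}
    for symbols, freq in corpus.items():
        out, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        merged[tuple(out)] = merged.get(tuple(out), 0) + freq
    return merged


def train_bpe(word_freqs, num_merges):
    """Learn up to `num_merges` merges, one pair per iteration."""
    corpus = {tuple(word): freq for word, freq in word_freqs.items()}
    vocab = sorted({ch for word in word_freqs for ch in word})
    merges = []
    for _ in range(num_merges):
        pairs = count_pairs(corpus)
        if not pairs:
            break
        # Highest count wins; ties go to the lexicographically largest pair
        # (an assumption made for this sketch).
        best = max(pairs, key=lambda p: (pairs[p], p))
        corpus = merge_pair(corpus, best)
        merges.append(best)
        vocab.append(best[0] + best[1])
    return vocab, merges, corpus


word_freqs = {"cat": 10, "mat": 6, "fun": 1, "bun": 5, "cats": 3}
vocab, merges, corpus = train_bpe(word_freqs, num_merges=3)

print(merges)  # [('a', 't'), ('c', 'at'), ('u', 'n')]
print(vocab)   # ['a', 'b', 'c', 'f', 'm', 'n', 's', 't', 'u', 'at', 'cat', 'un']
```

With three merges, this sketch ends up with the same vocabulary entries and the same final corpus as the walkthrough. The third merge is a tie between ("m", "at") and ("u", "n"), both with 6 occurrences, so a different tie-breaking rule would produce "mat" instead of "un".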

Since implementing BPE from scratch can be relatively complicated, in this demonstration we use the BPE tokenizer from OpenAI's open-source tiktoken library, which implements its core algorithms in Rust to improve computational performance.

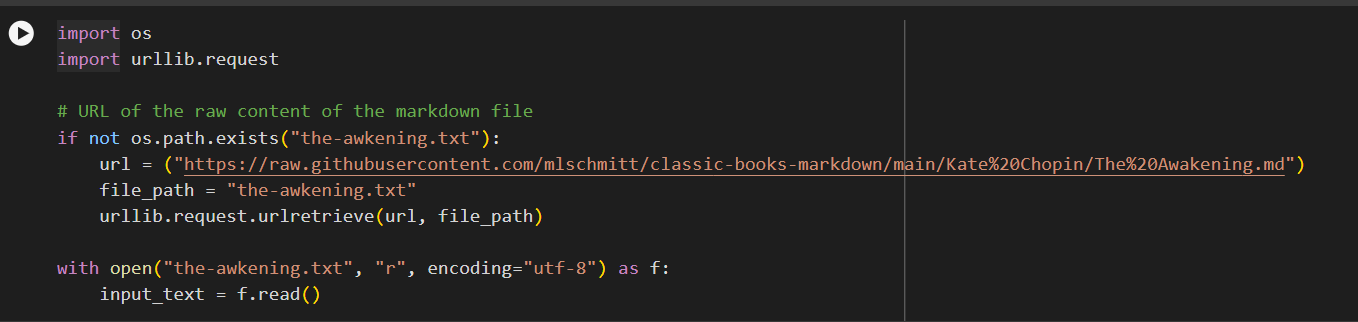

» Importing Text Data

The dataset we will work with is The Awakening by Kate Chopin, a public-domain novel (used here for learning purposes) published in 1899, so it is no longer under copyright.

Note: It is recommended to be aware and respectful of existing copyrights and people's privacy while preparing datasets for training LLMs.

The goal is to tokenize this input text and embed it for an LLM.

» Working with Byte-Pair Encoder Tokenizer

Importing the `tiktoken` library

```python
import importlib

import tiktoken
```

Once installed, we can instantiate the BPE tokenizer from tiktoken as follows:

```python
# Load the BPE encoding that GPT-2 uses
tokenizer = tiktoken.get_encoding("gpt2")

text1 = "Hello, you're learning data science with analytical-nikita.io"
text2 = "In the sunlit terraces of the palace."

# Join the two texts with the end-of-text marker between them
text = " <|endoftext|> ".join((text1, text2))
```

Encoding with BPE

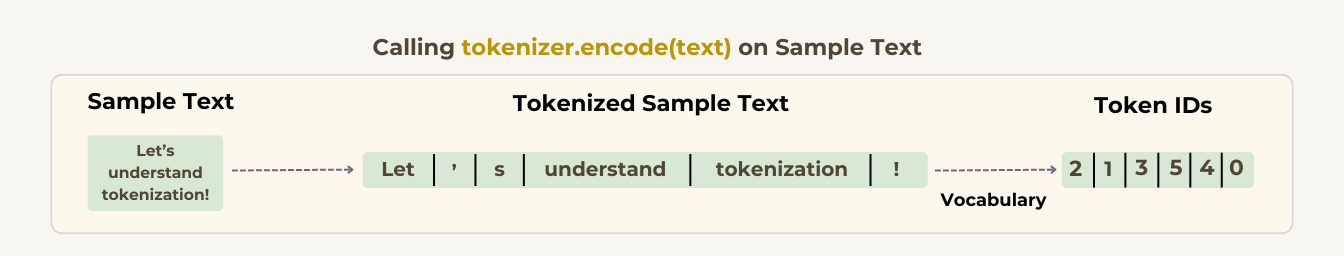

The tokenizer has an `encode` method that splits text into tokens and carries out the string-to-integer mapping to produce token IDs via the vocabulary.

```python
# allowed_special lets <|endoftext|> be encoded as a single special token
token_ids = tokenizer.encode(text, allowed_special={"<|endoftext|>"})
print(token_ids)
```

The code above prints the following token IDs:

```
# Output: [15496, 11, 345, 821, 4673, 1366, 3783, 351, 30063, 12, 17187, 5350, 13, 952, 220, 50256, 554, 262, 4252, 18250, 8812, 2114, 286, 262, 20562, 13]
```

The `<|endoftext|>` token is assigned a relatively large token ID, namely 50256.

In fact, the BPE tokenizer, which was used to train models such as GPT-2, GPT-3, and the original model used in ChatGPT, has a total vocabulary size of 50,257, with <|endoftext|> being assigned the largest token ID.
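As background on where the 50,257 comes from: GPT-2's vocabulary is commonly described as 256 byte-level base tokens, plus 50,000 learned merges, plus one special token. That breakdown is not spelled out above, so treat the constant names in this small sketch as illustrative assumptions. It also hints at why no `<|unk|>` token is needed: any string can be written as UTF-8 bytes, and every byte value is below 256.

```python
# Assumed breakdown of GPT-2's vocabulary (names are illustrative)
BYTE_TOKENS = 256        # one base token per possible byte value
LEARNED_MERGES = 50_000  # sub-word units learned by BPE
SPECIAL_TOKENS = 1       # <|endoftext|>

vocab_size = BYTE_TOKENS + LEARNED_MERGES + SPECIAL_TOKENS
largest_id = vocab_size - 1  # token IDs start at 0

print(vocab_size)  # 50257
print(largest_id)  # 50256

# Any text, even an unseen word or an emoji, reduces to bytes in 0..255
for sample in ["analytical-nikita.io", "👋"]:
    byte_values = list(sample.encode("utf-8"))
    print(sample, len(byte_values), all(0 <= b < 256 for b in byte_values))
```

So in the worst case an unfamiliar string is represented byte by byte, which is less efficient but never fails.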

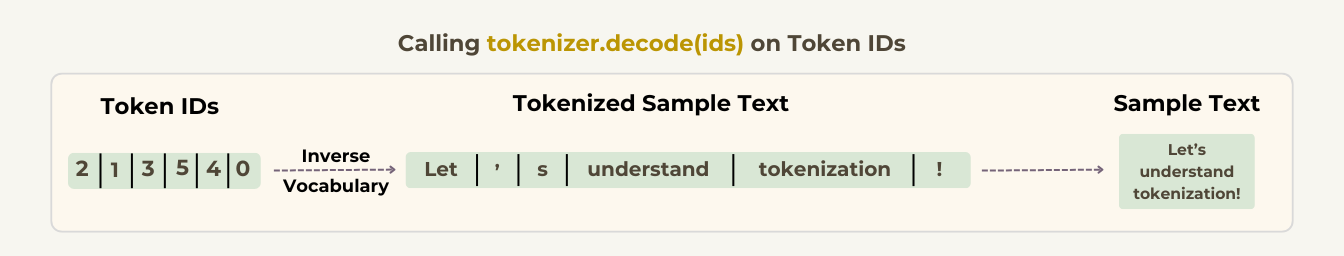

Decoding with BPE

We can then convert the token IDs back into text using the `decode` method, similar to the SimpleTokenizer that we built earlier:

```python
output_strings = tokenizer.decode(token_ids)
print(output_strings)
```

```
# Output: Hello, you're learning data science with analytical-nikita.io <|endoftext|> In the sunlit terraces of the palace.
```

The algorithm underlying BPE breaks down words like analytical-nikita.io that aren't in its predefined vocabulary into smaller sub-word units or even individual characters.

This enables it to handle out-of-vocabulary words while maintaining meaningful sub-word units.

Further, if you’d like to explore the full implementation, including code and data, then check out: Github Repository 👈🏻

If you found this read helpful, do not forget to give it a “heart” ❤️ and comment 🖋️ your thoughts!

Until next time, happy learning!